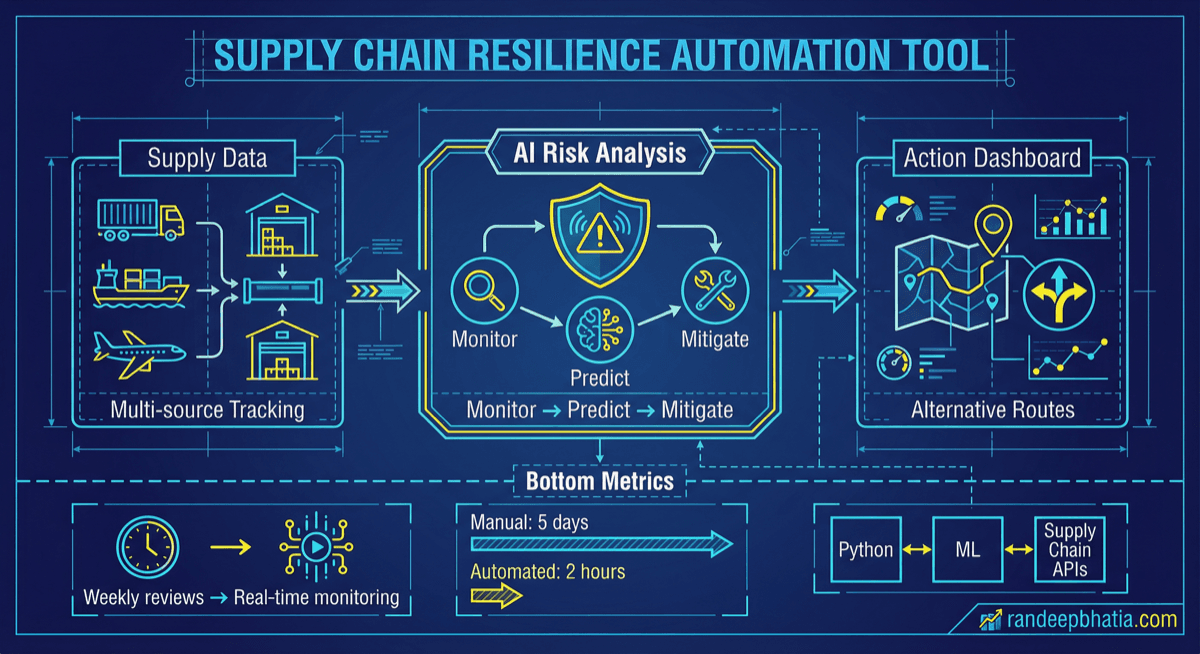

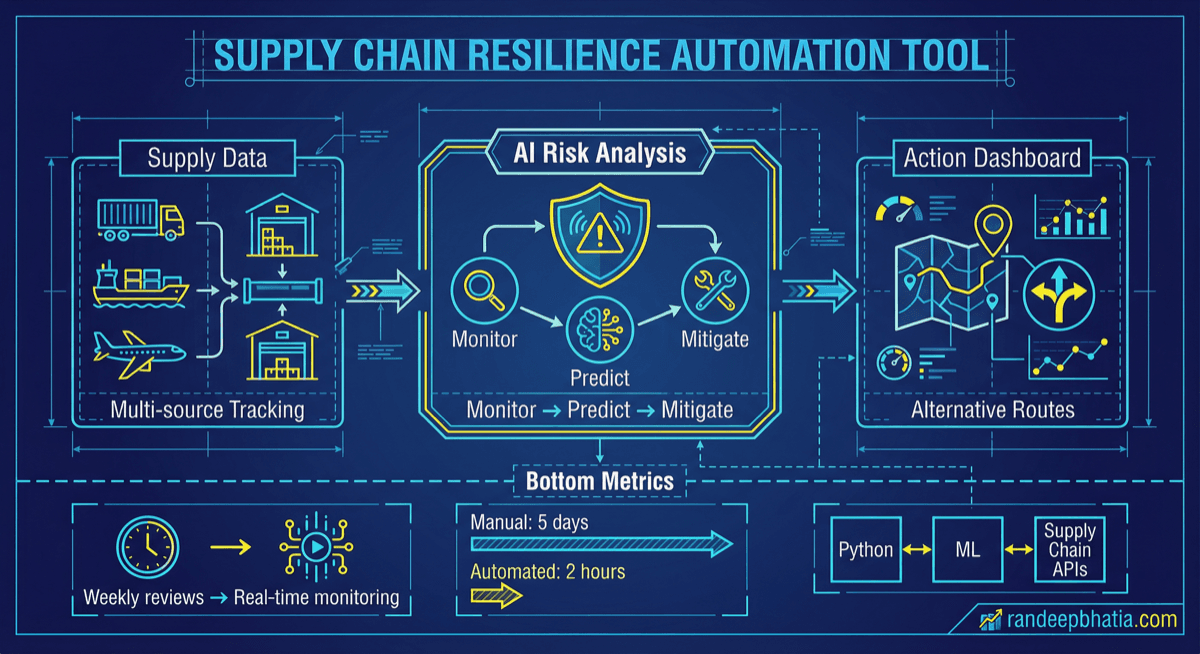

Supply Chain Resilience Automation

A technical automation blueprint for supply chain resilience: a step-by-step guide to implementing AI-powered workflows.

2025-06-17
