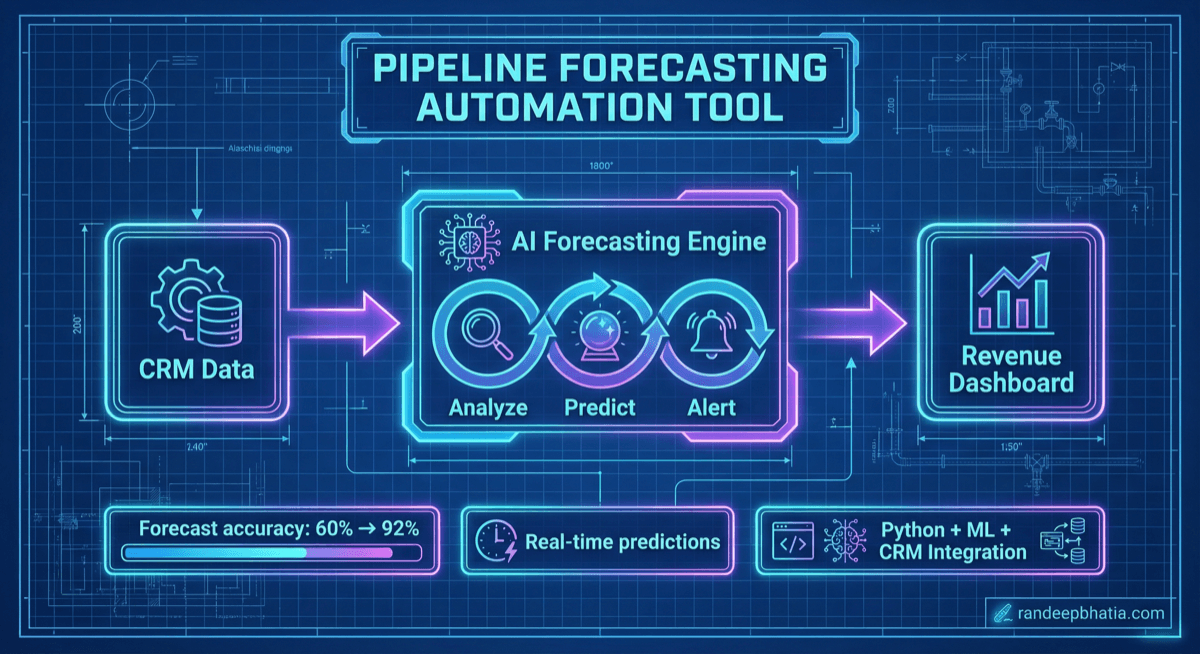

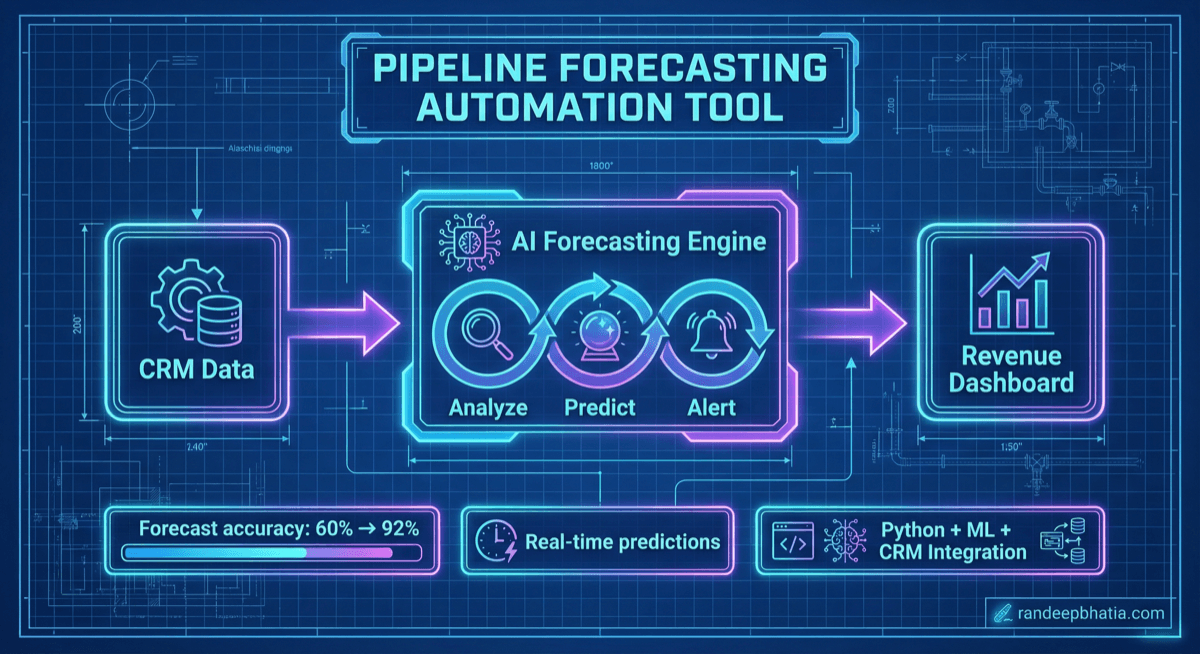

Pipeline Forecasting Automation

A technical automation blueprint for pipeline forecasting, with a step-by-step guide to implementing AI-powered workflows.

2025-10-14
