AI architecture

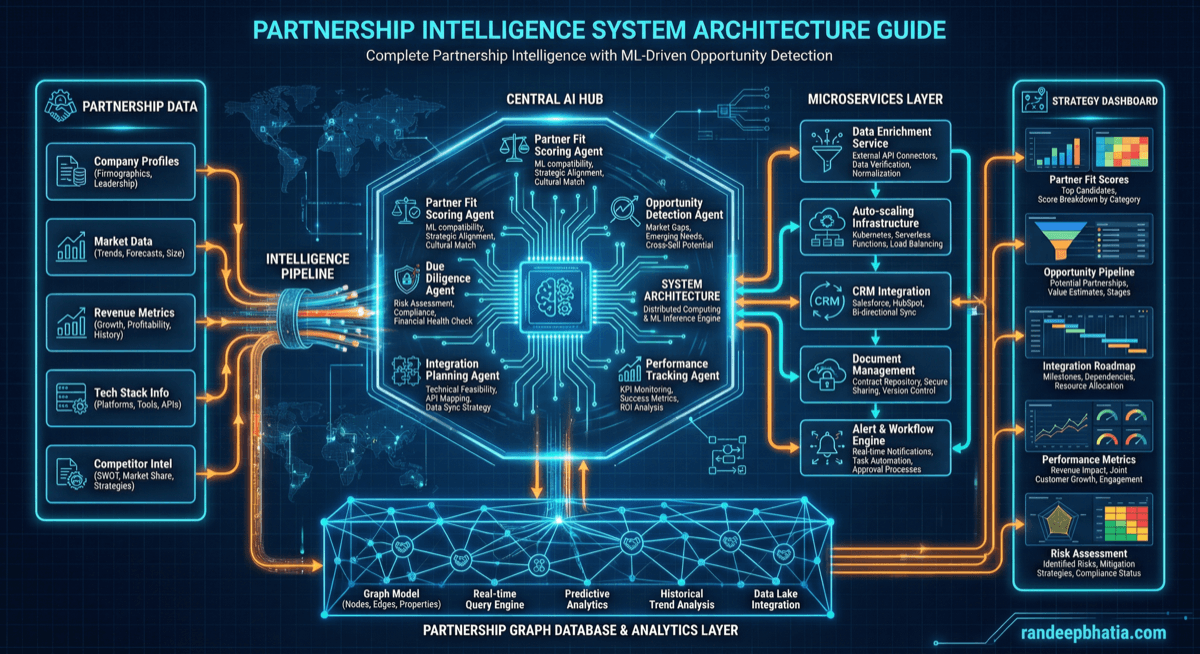

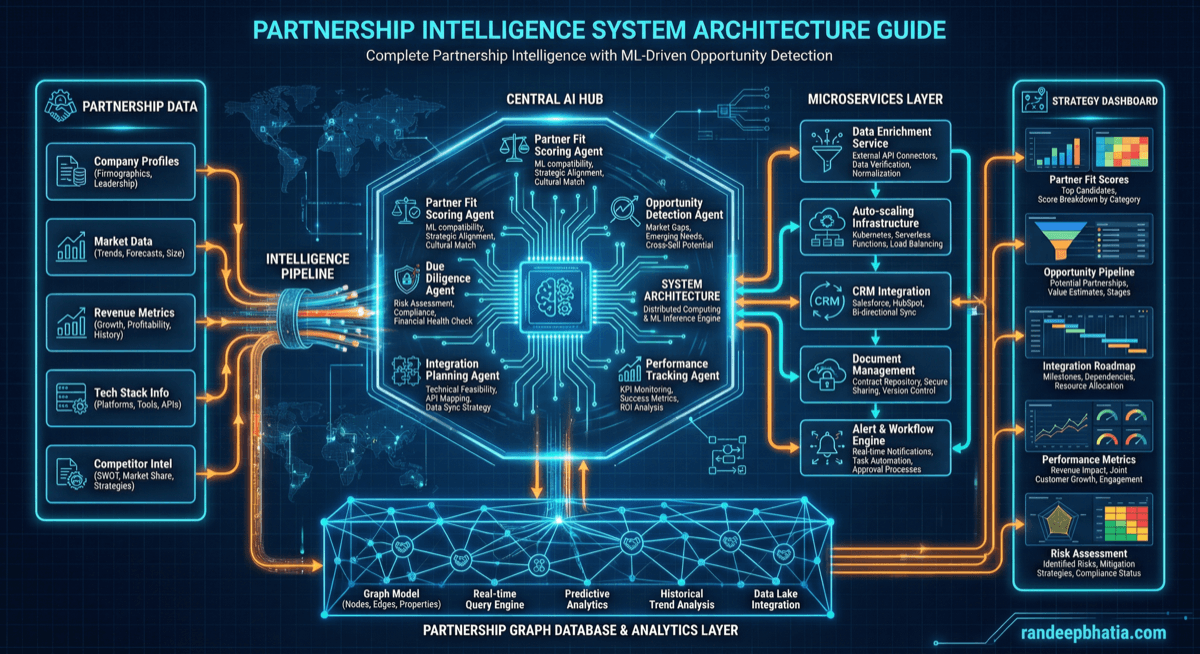

Partnerships Alliances System Architecture

Production-ready system architecture for partnerships and alliances. It covers component design, data flow patterns, scaling strategies, and security considerations.

2025-10-30
